
UC Santa Cruz team chosen to compete in Amazon’s Alexa Prize Challenge

By Tim Stephens

UC Santa Cruz

December 9, 2016 — Santa Cruz, CA

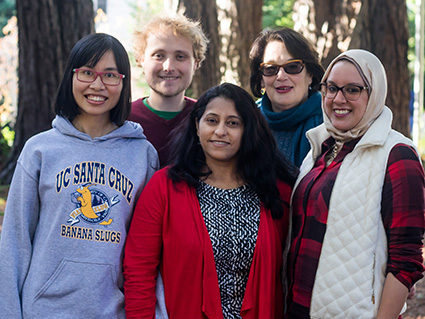

(Photo above: The UC Santa Cruz SlugBots team: Jiaqi Wu, Kevin Bowden, Amita Misra, Marilyn Walker, and Shereen Oraby. Credit: Yin Wu)

UCSC computer scientists win sponsorship from Amazon to develop a “socialbot” that can converse with humans

A team of UC Santa Cruz graduate students led by computer science professor Marilyn Walker has been chosen by Amazon to compete in the inaugural $2.5 million Alexa Prize Challenge.

The UCSC team, called SlugBots, is one of 12 university teams sponsored by Amazon to try to develop a “socialbot” that can converse coherently with humans using Alexa, the voice-controlled digital assistant in Amazon’s Echo and other devices. Amazon is giving each of the 12 teams, selected from more than 100 applicants, a $100,000 stipend, Alexa-enabled devices, free access to Amazon Web Services, and support from the Alexa team.

The team with the highest-performing socialbot will win a $500,000 prize. An additional prize of $1 million will be awarded to the winning team’s university if their socialbot achieves the grand challenge of conversing coherently and engagingly with humans on popular topics for 20 minutes.

“Dialogue interaction has always been the core interest of my lab, so this challenge fits well with the research projects my students are working on,” said Walker, who heads the Natural Language and Dialogue Systems Lab in the Baskin School of Engineering at UC Santa Cruz.

Conversational AI

The SlugBots team consists of four computer science graduate students in Walker’s lab: Kevin Bowden, Shereen Oraby, Amita Misra, and Jiaqi Wu. Bowden, the team lead, was already working on a socialbot project, although with a narrower focus than the Alexa Prize competition. The SlugBots approach builds on this and other ongoing work in the lab on various aspects of what is sometimes called “conversational artificial intelligence.”

For a digital agent to carry on a conversation, it has to make sense of what is said to it; find relevant information and, based on the context, identify potential content for a response; choose appropriate content for the response from that range of possibilities; and finally construct a coherent, grammatical spoken response.
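To make those four stages concrete, here is a minimal toy sketch in Python. It is not the SlugBots system: the function names (`understand`, `retrieve`, `select`, `generate`), the tiny `KNOWLEDGE` table, and the keyword-matching logic are all hypothetical stand-ins, each far simpler than the research problem it represents.

```python
import re
from typing import Dict, List, Optional

# Hypothetical toy knowledge base; a real socialbot would draw on large external sources.
KNOWLEDGE: Dict[str, List[str]] = {
    "sports": ["The home team won last night.", "The season starts in April."],
    "science": ["A new exoplanet was announced this week."],
}

def understand(utterance: str) -> Optional[str]:
    """Stage 1: make sense of the input (here, naive keyword topic spotting)."""
    words = re.findall(r"[a-z]+", utterance.lower())
    for topic in KNOWLEDGE:
        if topic in words:
            return topic
    return None

def retrieve(topic: Optional[str]) -> List[str]:
    """Stage 2: find candidate content for a response, given the detected topic."""
    return KNOWLEDGE.get(topic, []) if topic else []

def select(candidates: List[str], history: List[str]) -> Optional[str]:
    """Stage 3: choose one candidate, skipping anything already said in this conversation."""
    for candidate in candidates:
        if candidate not in history:
            return candidate
    return None

def generate(content: Optional[str]) -> str:
    """Stage 4: realize the chosen content as a sentence (a fixed template here)."""
    if content is None:
        return "I don't have anything new on that. What else is on your mind?"
    return "Here's something interesting: " + content

def respond(utterance: str, history: List[str]) -> str:
    """Run all four stages in order, recording what was said in `history`."""
    topic = understand(utterance)
    content = select(retrieve(topic), history)
    if content is not None:
        history.append(content)
    return generate(content)

history: List[str] = []
print(respond("Let's talk about sports", history))
print(respond("More sports, please", history))
```

The fixed template in `generate` is exactly the kind of "mechanically plugging information into slots" behavior Oraby describes below; much of the research effort goes into replacing each of these stubs with learned, context-sensitive components.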

“Those are the high-level tasks, and each one is a big research problem on its own,” Oraby said. “And then you want it to be unpredictable and engaging, so it doesn’t seem to be mechanically plugging information into slots.”

In addition to Amazon’s Alexa, the digital assistants now on the market include Apple’s Siri, Google Assistant, and a growing number of others. But none of them can really carry on a conversation.

“Research on conversational agents is exploding right now, and there are tons of people working on the problem,” Walker said. “That’s a good thing, because there is a lot of room for improvement. I expect our work here at UCSC on expressive language generation, understanding informal language, and statistical approaches to dialogue management to be key to making SlugBots competitive. I am so proud of my team for being selected out of over 100 teams that applied.”

Much of the work in Walker’s lab is data driven and involves mining social media to compile a large database of examples of social language and casual dialogue, and then analyzing and annotating them so they can be used to train computer algorithms using machine learning techniques.

Sarcasm and humor

Things like sarcasm and humor are especially challenging for a computer algorithm to identify or to use. Oraby’s thesis research has focused on identifying and classifying different styles of expressing sarcasm, and developing algorithms both to detect it in the user and to use it in a response. “It can give the socialbot personality, but it’s important to know when it’s appropriate,” she said.

Wu is tackling another aspect of natural speech that digital agents have trouble with: emotional content, or how a person feels about a situation or event. Simply identifying whether something is good or bad is difficult unless the speaker uses words with clear positive or negative connotations. “For example, if you tell Siri you broke your leg, it doesn’t respond appropriately,” Wu said.
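A minimal sketch in Python shows why word-based approaches struggle with an example like Wu's. It assumes a simple lexicon-based polarity scorer, a common baseline technique rather than the method used in Walker's lab; the `LEXICON` entries and the `lexicon_polarity` function are hypothetical.

```python
import re
from typing import Dict

# Hypothetical mini-lexicon; real sentiment lexicons score thousands of words.
LEXICON: Dict[str, int] = {
    "great": 1, "love": 1, "happy": 1, "won": 1,
    "terrible": -1, "hate": -1, "sad": -1, "awful": -1,
}

def lexicon_polarity(utterance: str) -> str:
    """Sum the scores of known words and map the total to a polarity label."""
    words = re.findall(r"[a-z']+", utterance.lower())
    score = sum(LEXICON.get(word, 0) for word in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(lexicon_polarity("I love this great song"))
print(lexicon_polarity("That was awful"))
print(lexicon_polarity("I broke my leg"))
```

The last sentence contains no clearly positive or negative words, so the scorer labels it neutral even though the event is plainly bad. Recognizing that requires knowledge about situations and events, not just word connotations.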

Misra’s research focuses on argumentative dialogue and involves analyzing debates on social media. “We have these huge dumps of data from social media sites, and we want to develop an algorithm that can extract the arguments used by different sides in debates on key topics like gun control or the death penalty,” she explained. “Alexa could then analyze what the user is saying, identify their position, and present different arguments.”

“We’re interested in features we can use to give personality to the socialbot to make the conversation more engaging,” said Bowden, whose research includes work on an algorithm to generate dialogue from a narrative, with variations that alter the personality represented by each speaker.

The teams will receive feedback from Alexa users to help them improve their algorithms. Beginning in April 2017, as part of the research and judging process, Alexa customers will be able to converse with a socialbot about a current topic of interest to them, such as sports, politics, celebrity gossip, or scientific breakthroughs. This user feedback will be one factor in selecting the best socialbots to advance to the final round of the competition. Winners will be announced in November 2017.

###
