The Gregarious Machines

by Paige Welsh

How UCSC researchers are making computers more human

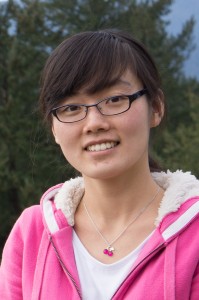

If you’ve ever tried to have a conversation with your phone Joaquin-Phoenix-style, you know that Siri is a pretty frigid date. Computers really struggle with personality. Zhichoa Hu, a Ph.D. student in Computer Science at UC Santa Cruz, is trying to change that. She’s making our computers more personable by blending linguistics and psychology with programming. Computers may diversify into the introverted and the extroverted, the conscientious and the careless, the helpful and the spiteful, and much more. Your computer can be your pal.

Hu’s dream is to make a virtual agent or avatar that will adapt to the personality of the user to make human-technology interactions feel more natural.

“These kind of virtual agents will come into daily use. I don’t think today’s virtual agents are very mature. I personally don’t like interacting with Siri a lot,” says Hu.

Zhichoa Hu, a Ph.D. student in UCSC’s Computer Science program, is trying to make a pal out of your computer. (contributed)

Previous studies show that people believe their machines are more intelligent if the machines have a personality similar to their own. However, most machines don’t have a personality, period, let alone one similar to the user’s. Hu and her team are diving into social science to fix this.

It’s the little words that count

We express our personalities through phrasing and body language. Think about all the little words you use in conversation. “Yeah” and “Right” acknowledge ideas. “Pretty” and “Sort of” mitigate the potency of ideas.

Let’s say two different people went to a restaurant and each had a bad experience. One person might say, “That place? The service was slow, and when they did come around, they were rude! Don’t waste your time.” Another may say, “It’s not that great, I guess. It’s just that the service took a while to come, and when they did, umm, they just weren’t that nice, you know?” They conveyed the same experience, but the person who used more phrases like “I guess” and “you know” came off as more shy.

It gets even more complicated once you put two personalities in conversation together. For example, two extroverts will feed each other’s enthusiasm, and their gestures will grow wider and wider. If an extrovert converses with an introvert, the extrovert will narrow their body language to better match the introvert. We also entrain, or parallel, the sentence structures of whomever we’re talking to.

If my friend says, “Is it just me, or did the waiter look like an angry turtle when he saw the tip?” I’m probably going to say, “Yeah, but he looked like an angry turtle before he saw the tip.” My reply keeps my friend’s word order, with the waiter coming before the “tip,” as opposed to a sentence like, “Before he saw the tip, the waiter looked like an angry turtle.” It’s subtle, but these phrasing choices make conversation smoother and more agreeable.

With this in mind, Hu can program software to inject words like “Yeah” at choice moments and entrain the user’s phrasing. Hu says all the research her team has put into the psychology of conversation is changing the way she thinks about her daily interactions.
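To make the idea concrete, here is a minimal sketch of what injecting a “Yeah” and entraining a user’s phrasing could look like. It is not Hu’s software. The function names, the `VARIANTS` table, and the simple substitution approach are all assumptions made for illustration, and the phrases are borrowed from the street-directions example that appears later in this article.

```python
import re

# Hypothetical table of interchangeable phrasings (an assumption for this
# sketch): the system's default phrase maps to variants a user might say.
VARIANTS = {
    "turn right": ["hang a right", "take a right"],
    "turn left": ["hang a left", "take a left"],
}


def entrain(reply: str, user_utterance: str) -> str:
    """Swap the reply's default phrases for variants the user just used."""
    heard = user_utterance.lower()
    for default, alternatives in VARIANTS.items():
        for alt in alternatives:
            if alt in heard:
                reply = re.sub(re.escape(default), alt, reply, flags=re.IGNORECASE)
                break
    return reply


def add_acknowledgment(reply: str, word: str = "Yeah") -> str:
    """Prefix the reply with an acknowledgment word such as "Yeah"."""
    first, _, rest = reply.partition(" ")
    # Lowercase the old first word unless it is the pronoun "I".
    # Limitation: a proper noun in first position would be lowercased too.
    if first != "I" and not first.startswith("I'"):
        first = first.lower()
    return f"{word}, {first} {rest}".rstrip()


def personable(reply: str, user_utterance: str) -> str:
    """Entrain to the user's phrasing, then add an acknowledgment."""
    return add_acknowledgment(entrain(reply, user_utterance))


if __name__ == "__main__":
    print(personable("Turn right onto Cedar.", "Yeah, ok so at Cedar I hang a right?"))
```

A real system would need far more than a lookup table, since choosing the “choice moment” for a “Yeah” is the hard part. The sketch only shows the two moves the article describes: echoing the user’s words and adding an acknowledgment.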

“I tend to observe people more when they’re talking. Those extroverted people, they really move!” says Hu.

How much humanity do we want?

Before Hu and her team go ahead and program Siri to say, “I can totally find like six taco places in your area,” they’re researching when we want computers to act like people.

Hu conducted a study in which she gave participants a real transcribed dialogue between a person giving directions over the phone and a pedestrian. Hu gave the study participants five sentence options to fill a blank in the conversation. For example, when the pedestrian asked, “Yeah, ok so at Cedar I hang a right?” potential responses spanned a spectrum from the very human, “Yeah, at Cedar hang a right,” to the very robotic, “Yes. Turn right onto Cedar.” Participants rated the naturalness of each option.

Hu was surprised to discover that participants rated the directions that hadn’t been edited by her software to sound human as the most natural. Hu and her team did a follow-up study in which they instead asked respondents to rank friendliness. Participants ranked the directions modified to sound human as the friendliest.

Hu suspects that people thought the bland directions were “natural” because they were accustomed to concise directions from GPS devices. However, the result raises questions about when we want our computers to act like people. Think about the last time a human being gave you directions. Were those directions better even if they were friendlier?

Too close for comfort

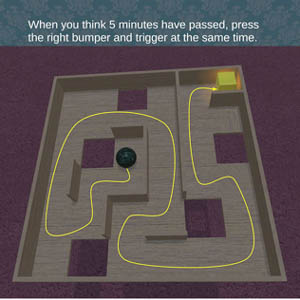

Imagine conversing computers: a screenshot of the avatars Hu is using to test programmed personality. (contributed)

Hu’s work won’t just make better companions out of our smartphones. It could also generate diverse casts of non-player characters in video games. In Skyrim, a role-playing adventure game, just about every castle guard has a misty-eyed story of his glory days before he took a fated arrow to the knee. It’s a funny idiosyncrasy, but the cut-and-paste character is a reminder that the player is pecking at a keyboard instead of slaying dragons. Unique interactions between non-player characters based on their personalities can enhance the overall gaming experience.

Hu shows her work in this area by pulling up a window in which two human avatars, reminiscent of characters from The Sims, engage in conversation. They do mirror each other’s body language, but they aren’t convincing anyone right now. They have impeccable posture, but move with the rigidness of Pilates-class refugees.

Testing of these demos has had mixed results. Initial avatars had no faces, and study participants rating the friendliness of the avatars were so uncomfortable with the faceless avatars that they could not fairly assess the body language. Even slight faults in human imitation make us feel “creeped-out” because we have a lifetime of experience to tell us what’s normal.

In a more recent trial where the avatars had faces, a timing issue threw off the conversation.

“[I asked], ‘How do you think this video is? Can we use this for our user evaluation?’ [The lab was] like, oh my god, they’re dancing!” says Hu. The avatars would immediately mirror each other’s movements, and thus they swayed in sync. Hu is now working on delaying the avatars’ responses so they no longer square dance while talking.
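The timing problem is easy to picture in code. The sketch below is an illustration only, assuming poses arrive one per animation frame and that the delay is a fixed number of frames. The article does not say how Hu implements the delay, and the class name `DelayedMirror` and the pose labels are hypothetical.

```python
from collections import deque


class DelayedMirror:
    """Copies a partner's pose after a fixed number of frames."""

    def __init__(self, delay_frames: int, idle_pose: str = "idle"):
        # Pre-fill the queue so the avatar holds an idle pose until the
        # partner's first movement has waited out the delay.
        self._buffer = deque([idle_pose] * delay_frames)

    def step(self, partner_pose: str) -> str:
        """Record the partner's current pose and return the pose to show now."""
        self._buffer.append(partner_pose)
        return self._buffer.popleft()


if __name__ == "__main__":
    partner = ["wave", "nod", "idle", "idle"]

    instant = DelayedMirror(delay_frames=0)   # mirrors at once: the "dancing" case
    delayed = DelayedMirror(delay_frames=2)   # mirrors two frames later

    print([instant.step(p) for p in partner])
    print([delayed.step(p) for p in partner])
```

With a delay of zero the avatar moves in lockstep with its partner, which is the synchronized swaying the lab saw. With a delay of two frames the same gestures arrive late enough to read as a response rather than a dance step.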

The newest writers

Kevin Doyle, an undergraduate assisting Hu, is working on software that can turn blog-post-style text into a conversation between two people. Such software could generate convenient teaching tools because people are more likely to stay engaged in a dialogue than a monologue. As of now, Doyle’s program splits the monologue up into something akin to a play script with added words and entrainment. He plans to create two distinct characters by programming in personality next.
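As a rough picture of the play-script step, the sketch below splits a monologue into sentences and deals them out to two speakers, adding an acknowledgment word to each reply. It is a toy, not Doyle’s program. The function name, the speaker labels, and the rule of alternating sentence by sentence are assumptions made for illustration.

```python
import re


def monologue_to_dialogue(text, speakers=("A", "B"), acknowledgment="Right"):
    """Split a monologue into sentences and alternate them between speakers."""
    # Naive sentence split on ., ! or ? followed by whitespace (an assumption;
    # abbreviations such as "Dr." would be split incorrectly).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    lines = []
    for i, sentence in enumerate(sentences):
        speaker = speakers[i % len(speakers)]
        if i > 0:
            # Every turn after the first opens by acknowledging the last one.
            sentence = f"{acknowledgment}. {sentence}"
        lines.append(f"{speaker}: {sentence}")
    return lines


if __name__ == "__main__":
    post = "Sea otters use tools. They crack shells with rocks. Kelp forests depend on them."
    for line in monologue_to_dialogue(post):
        print(line)
```

Giving the two speakers distinct personalities, the next step Doyle describes, would mean varying the added words per speaker rather than using one acknowledgment for both.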

However, Doyle isn’t convinced writers have to worry about their jobs anytime soon.

“Creative writing, human writing, it speaks to the human soul. It’s created for people by people because of a mutual understanding,” says Doyle.

Perhaps we’ll never need computers to write novels, but if human personality is built from a lifetime of interactions, is a set of programmed instructions ever going to be a comparable substitute? We’ll have to keep asking our phones questions and see.

###

Paige Welsh is a marine biology major and literature minor at UC Santa Cruz. She can be contacted at phwelsh@ucsc.edu.